Microsoft Copilot Chat

Copilot Chat is a generative artificial intelligence (AI) tool created by Microsoft. You can use it to brainstorm, get answers to questions, organize and summarize documents, and generate text, images and more.

There are two versions of Copilot Chat:

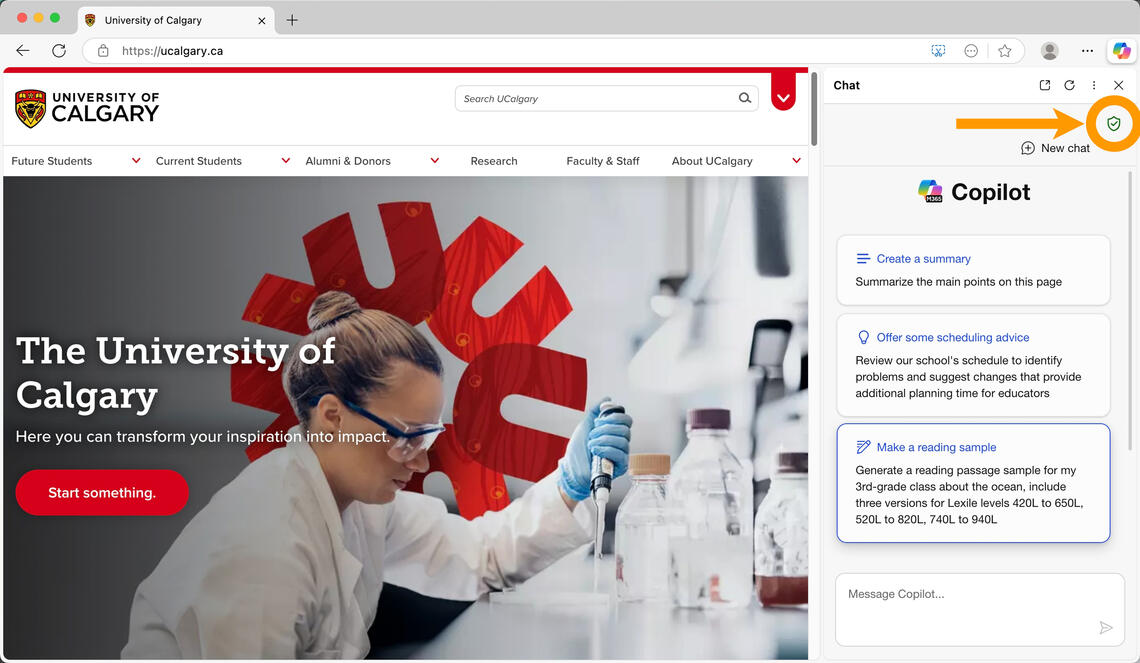

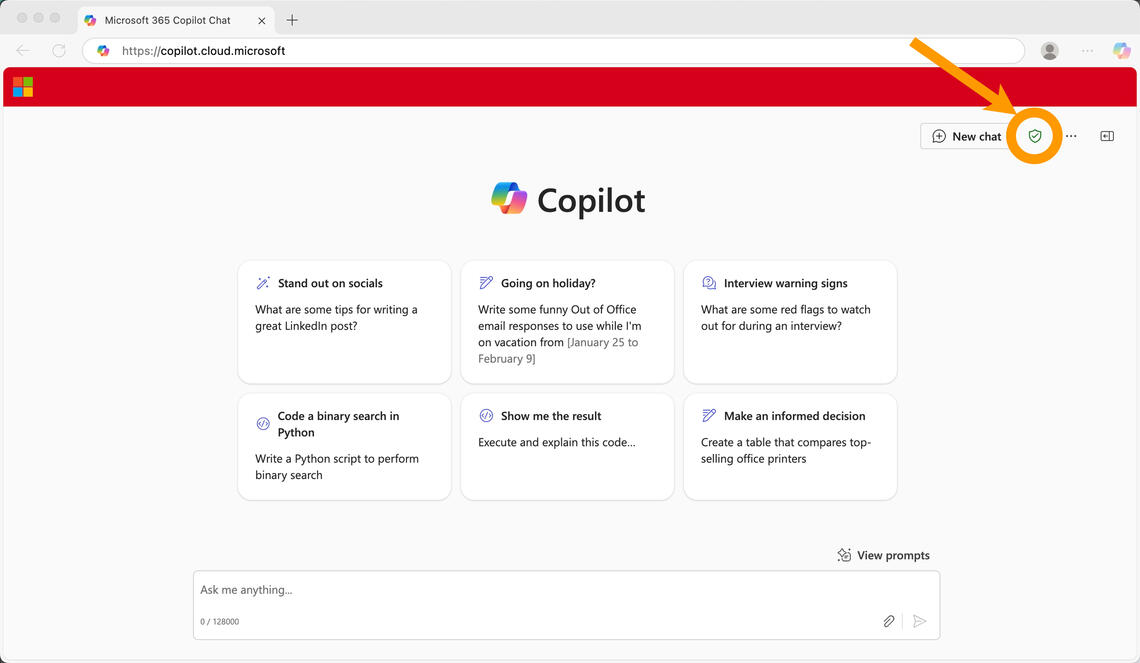

- A secure version is available to anyone with a UCalgary IT account. To keep your data protected, you must sign in using your UCalgary credentials. You’ll know you’re using the secure version if you see the green shield (shown in the images on this page). With the secure version of Microsoft Copilot Chat, you can safely enter level 1, 2 or 3 information.

- The public version may be used only for level 1 (public) information.

You can access Microsoft Copilot Chat in three ways: in the Microsoft Edge browser, where Copilot Chat is built in; by visiting the Copilot Chat website in any web browser; or by using the desktop icon on your managed UCalgary device.

Sharing confidential or personal information in any chatbot or automated system comes with risks. Using the secure version of Microsoft Copilot Chat offers better security, privacy and protection of data. It also complies with UCalgary policies and standards.

Copilot Chat in Edge browser: the green shield means you are using the secure version

Copilot Chat website: the green shield means you are using the secure version

Understanding Information Security at UCalgary

UCalgary classifies information into four security levels to ensure proper protection:

Level 1 (public)

Safe to share with anyone (e.g. website content).

Level 2 (internal)

For UCalgary use only, but not highly sensitive (e.g. internal de-identified reports).

Level 3 (confidential)

Restricted to specific groups; unauthorized access could cause harm (e.g. personal information of students, faculty or staff).

Level 4 (restricted)

Highly sensitive information requiring strict security measures (e.g. credit card or personal health information).

For more details, visit the Information Security Classification Standard.

Ethical Use of Microsoft Copilot Chat

As you are using Microsoft Copilot Chat, it is important to understand the ethical guidelines around its use.

Some key guidelines include:

- Use Microsoft Copilot Chat ethically, in accordance with applicable laws and UCalgary’s Acceptable Use of Electronic Resources and Information Policy and Information Asset Management Policy.

- Obtain consent directly from individuals before entering their personal information into, or disclosing it to, an AI tool. For questions, please contact foip@ucalgary.ca.

- Ensure that the rights of creators are respected and that you have the appropriate written permission to input or disclose materials protected by copyright or other intellectual property rights into an AI tool. For questions, please contact copyright@ucalgary.ca.

- Do not use an AI tool to make decisions that will have a serious impact on the rights of an individual, or that could have serious consequences for an individual’s life, education, career, dignity or autonomy.

- Be transparent and make sure to disclose when you use AI to generate information, reports, summaries, images, etc.

- Carefully review all generated content for accuracy before use.

FAQs

No. Microsoft has renamed Office 365 to Microsoft 365 Copilot; this is different from the Copilot Chat tool.

Microsoft 365 Copilot uses a similar model to Microsoft Copilot Chat. However, it also draws on the documents stored in Office 365 (SharePoint, Teams and OneDrive) that a user has access to, and it is integrated directly into Office 365 apps. Microsoft 365 Copilot is still being evaluated by IT.

Entering or disclosing confidential or personal information in any software or automated system can carry additional risks. However, the secure version of Copilot Chat, which requires you to log in, offers better security, privacy and protection of data.

The public version of Copilot Chat does not ensure that your data is secure. It is unclear how the platform’s learning algorithm stores, shares and uses information, which may put sensitive UCalgary data at risk. The public version also raises concerns about inaccuracy, bias or misuse, and about a lack of transparency regarding who owns or manages the platform.

UCalgary advises caution when using other generative AI tools, as it is not certain how these tools store, share or use the information you enter.

Only level 1 (public) information should be used in other AI chatbots, as they don’t offer the same protections as the secure version of Microsoft Copilot Chat. With the secure version of Microsoft Copilot Chat, you can safely enter level 1, 2 or 3 information.

Level 4 information should never be entered into any AI tool or chatbot.
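The rules above can be summarized as a simple lookup. The following Python sketch is for illustration only and is not an official UCalgary tool; the names `Level`, `Tool`, `MAX_LEVEL` and `may_enter` are hypothetical and were chosen for this example.

```python
from enum import Enum, IntEnum


class Level(IntEnum):
    """UCalgary information security levels, as described on this page."""
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3
    RESTRICTED = 4


class Tool(Enum):
    """Hypothetical categories of AI tool used in this sketch."""
    SECURE_COPILOT_CHAT = "secure Copilot Chat (signed in, green shield)"
    PUBLIC_COPILOT_CHAT = "public Copilot Chat"
    OTHER_AI_CHATBOT = "other AI chatbot"


# Highest level each kind of tool may receive, per the guidance above.
# No tool is mapped to RESTRICTED, so level 4 is always rejected.
MAX_LEVEL = {
    Tool.SECURE_COPILOT_CHAT: Level.CONFIDENTIAL,
    Tool.PUBLIC_COPILOT_CHAT: Level.PUBLIC,
    Tool.OTHER_AI_CHATBOT: Level.PUBLIC,
}


def may_enter(level: Level, tool: Tool) -> bool:
    """Return True if information at `level` may be entered into `tool`."""
    return level <= MAX_LEVEL[tool]


print(may_enter(Level.CONFIDENTIAL, Tool.SECURE_COPILOT_CHAT))  # True
print(may_enter(Level.INTERNAL, Tool.PUBLIC_COPILOT_CHAT))      # False
print(may_enter(Level.RESTRICTED, Tool.SECURE_COPILOT_CHAT))    # False
```

A check like this covers only the security level. The ethical guidelines on consent, copyright and transparency still apply to anything you enter.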

If you want to acquire or use other generative AI platforms, please follow the Supply Chain Software Acquisition process.

Please be aware: as the end-user/requestor, you are responsible for ensuring that the chatbot you use collects, stores and manages information securely and in accordance with UCalgary’s policies and procedures. Please also refer to the Legal Services Guidelines for the Software Acquisition Process for more information on privacy and information security best practices.

The integrated Microsoft 365 version of Copilot Chat is still being evaluated by IT.

Yes. Microsoft Copilot Chat is available through the Microsoft 365 Mobile App. You must sign in to the app with your University of Calgary IT account to access Copilot Chat.

AI Essentials: Building Fluency and Productivity with Modern AI Tools

Discover how to harness the power of AI to transform productivity and simplify tasks. You will explore the fundamentals of AI, from its evolution and key concepts to ethical considerations and practical applications. You will dive into generative AI, advanced internet search techniques, and real-world scenarios that highlight AI’s impact on industries and inclusivity. This course will also expose you to practical use cases of AI through tools like Azure Cognitive Services, Microsoft Copilot, and Office 365 Copilot, helping you confidently integrate AI into your daily life and work.

AI Consultation Form

The Centre for Artificial Intelligence Ethics, Literacy and Integrity (CAIELI) offers guidance on the use of artificial intelligence on request.

Fill out the consultation form if you need:

- A presentation about AI use

- Assistance with a research project

- Staff training on the uses of AI

- A presentation about academic integrity

- Support with classwork